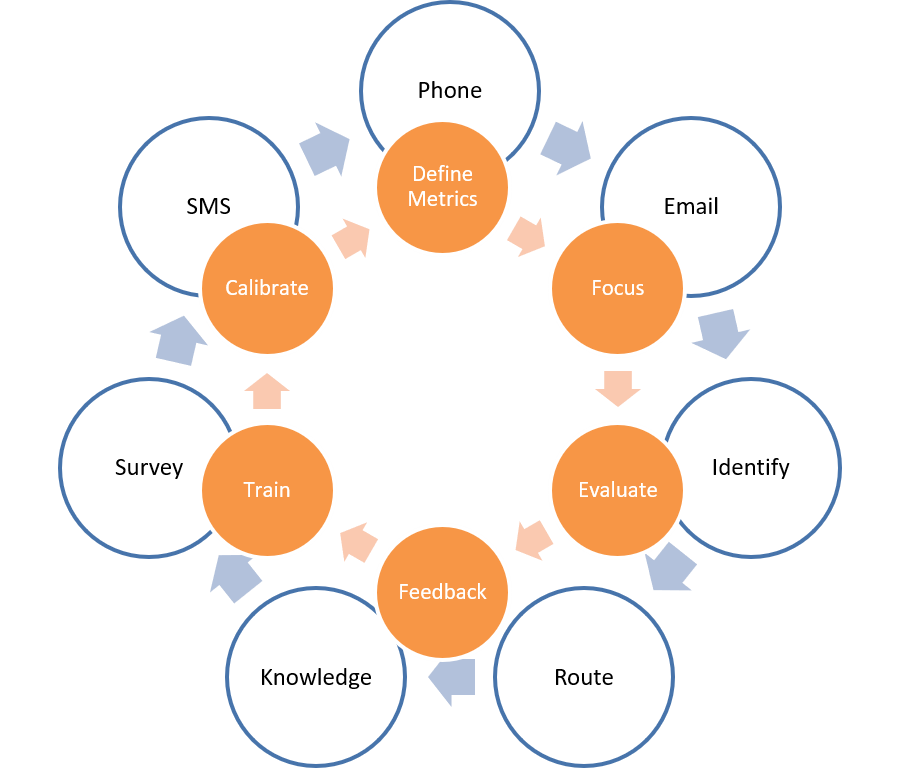

The Omni QM Process

In this guide, we take a close look at effective ways to use Omni QM to create and implement strong quality management processes that help your contact center achieve its quality management goals.

Quality management systems use interrelated quality management processes to achieve consistent results that help organizations understand activities, optimize the system, and improve performance.

Quality management processes typically cover the following parts of quality management:

- Define metrics: Deciding which metrics we want to measure and how we define success

- Focus: Selecting which interactions we want to measure and evaluate

- Evaluate: Determining how interactions are evaluated

- Feedback: Providing feedback to agents and supervisors

- Train: Having agents accept evaluations or receive additional training

- Calibrate: Calibrating evaluators

Defining What to Measure and Improve to Reach Company Goals

In the quality planning process, management provides contact centers with company-wide goals, which can include complying with industry standards, improving customer satisfaction and experience, increasing employee accuracy and efficiency, and so forth. Using these goals, contact centers then define the aspects of conversations that the company wants to measure and improve, define what successful quality management looks like, and decide which interactions will be measured.

Because customer experience is the backbone of quality management, we recommend starting your quality planning process by measuring key performance indicators (KPIs) such as Customer Satisfaction (CSAT), Net Promoter Score (NPS), and sentiment, all of which indicate how customers feel about a company and about their experience with it.

CSAT is a numeric score that shows how satisfied the customer is with the organization, product, service, and/or overall customer experience. NPS is a metric used in customer experience to measure a customer’s loyalty to an organization, product, or service. Sentiment is how the customer feels about their experience.
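Omni QM reports on these KPIs, but the survey arithmetic behind them is independent of the product. As a reference point, NPS is conventionally computed from 0-10 "How likely are you to recommend us?" ratings as the percentage of promoters (9-10) minus the percentage of detractors (0-6), and one common CSAT convention is the percentage of 4 or 5 answers on a 1-5 scale. The minimal Python sketch below shows those standard calculations; it is not Omni QM's implementation, and your surveys may define CSAT differently.

```python
from __future__ import annotations


def net_promoter_score(ratings: list[int]) -> float:
    """NPS from 0-10 ratings: % promoters (9-10) minus % detractors (0-6)."""
    if not ratings:
        raise ValueError("at least one rating is required")
    if any(r < 0 or r > 10 for r in ratings):
        raise ValueError("NPS ratings must be between 0 and 10")
    promoters = sum(1 for r in ratings if r >= 9)
    detractors = sum(1 for r in ratings if r <= 6)
    return 100 * (promoters - detractors) / len(ratings)


def csat_percent(ratings: list[int]) -> float:
    """One common CSAT convention: % of 4-5 answers on a 1-5 scale."""
    if not ratings:
        raise ValueError("at least one rating is required")
    if any(r < 1 or r > 5 for r in ratings):
        raise ValueError("CSAT ratings must be between 1 and 5")
    return 100 * sum(1 for r in ratings if r >= 4) / len(ratings)


# 4 promoters, 3 passives (7-8), and 3 detractors out of 10 responses.
print(net_promoter_score([10, 9, 9, 10, 8, 7, 7, 3, 6, 0]))  # 10.0
print(csat_percent([5, 4, 3, 2, 5]))  # 60.0
```

Note that passives (7-8) count toward the total number of responses but toward neither promoters nor detractors, which is why NPS ranges from -100 to 100.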

Roles

Quality management systems help people work together to achieve common goals. In any organization, there are many departments, each with its own hierarchy of employees. In a contact center, a typical structure may consist of employees who have been assigned to work as administrators, managers, supervisors, or agents, where each employee plays a specific role and performs specific tasks within a team in their department.

A role is a combination of privileges (i.e., permissions) that allow a user to access specific functionality within the contact center. Roles, therefore, define the type of work that users are allowed to do.

The ability to assign roles to users is a key component of Bright Pattern Contact Center, which offers many roles out of the box. The implementation of Omni QM enhances the existing roles with access to quality management features, including QM-specific privileges and access to the Quality Management section in Agent Desktop. Omni QM also adds two special roles specifically dedicated to quality management: Quality Evaluator and Quality Evaluator Admin.

In Omni QM, the most important roles are Agent, Supervisor, Quality Evaluator, and Quality Evaluator Admin:

- Agents help customers.

- Supervisors monitor and mentor agents to help them do their job better.

- Quality Evaluators assess interactions for quality and provide feedback to Supervisors and Agents.

- Quality Evaluator Admins ensure that all users have the tools and resources to do their job, and they provide leadership and direction.

The Agent role is meant for users who deal with customers directly. With Omni QM, agents can evaluate their own interactions (if granted a special privilege) and can see evaluations of their interactions performed by other people.

The Supervisor role is meant for users who will direct and help agents for the purpose of ensuring maximum performance from each team member. With Omni QM, supervisors have the ability to review agent interactions as well as confirm the evaluations of agents from teams they supervise.

The Quality Evaluator role is meant for users who will focus on evaluating agents and supervisors for quality management purposes. This role is specific to Omni QM. Privileges include being able to evaluate agent interactions, see evaluations completed by others, and delete evaluations they have completed themselves.

The Quality Evaluator Admin role is meant for users who will be responsible for managing quality management for a contact center. This role is specific to Omni QM. The role includes all privileges available for the Quality Evaluator role, as well as the ability to edit evaluation forms and public interaction searches, assign evaluations and calibrations to evaluators, manage evaluations across teams, delete any evaluations, and so forth.
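Because a role is a combination of privileges, it can be modeled as a set, and the statement that the Quality Evaluator Admin role includes all Quality Evaluator privileges becomes a simple subset relationship. The Python sketch below uses hypothetical privilege names chosen for illustration only; the actual privileges are defined by Bright Pattern Contact Center.

```python
from __future__ import annotations

# Hypothetical privilege names for illustration only; the real privilege
# names are defined by Bright Pattern Contact Center.
QUALITY_EVALUATOR = frozenset({
    "evaluate_interactions",
    "view_others_evaluations",
    "delete_own_evaluations",
})

# The admin role includes every Quality Evaluator privilege plus management ones.
QUALITY_EVALUATOR_ADMIN = QUALITY_EVALUATOR | {
    "edit_evaluation_forms",
    "edit_public_interaction_searches",
    "assign_evaluations_and_calibrations",
    "manage_evaluations_across_teams",
    "delete_any_evaluation",
}


def can(role: frozenset[str], privilege: str) -> bool:
    """A user may perform an action only if their role grants the privilege."""
    return privilege in role


print(QUALITY_EVALUATOR <= QUALITY_EVALUATOR_ADMIN)          # True
print(can(QUALITY_EVALUATOR, "edit_evaluation_forms"))        # False
print(can(QUALITY_EVALUATOR_ADMIN, "delete_any_evaluation"))  # True
```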

Focusing on Interactions That Are Most Likely to Require Evaluation

In an omnichannel contact center, agents resolve customer requests for a variety of services on any type of media channel (e.g., voice, chat, video, SMS, or social messenger), sometimes handling several at once if they have the capacity. Agents may handle hundreds of service requests every day. With so many interactions and interaction types, how does a contact center even begin to evaluate them?

Fortunately, Omni QM gives you the power to evaluate as many or as few interactions as you want, for any service or media channel, with any combination of search criteria. Omni QM provides 100 percent coverage of interactions with keyword search and sentiment analysis.

Omni QM lets evaluators sample interactions by setting granular search conditions in Eval Home, a dedicated quality management section of the Agent Desktop application. Eval Home features include search preset buttons, saved searches folders, the ability to configure specific search conditions and blocks of search conditions, as well as a comprehensive search results screen that lets you customize what data is presented. Evaluations may be performed directly or be assigned to other evaluators to complete.
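To make the idea of search conditions and condition blocks concrete, the following Python sketch filters a list of interactions and then draws a random sample for evaluation. The `Interaction` fields, the sentiment scale, and the rule that conditions within a block are combined with AND while separate blocks are combined with OR are all assumptions for illustration, not a description of how Eval Home evaluates searches.

```python
from __future__ import annotations

import random
from dataclasses import dataclass
from typing import Callable


@dataclass
class Interaction:
    # Hypothetical record; real interaction data comes from Eval Home searches.
    id: str
    agent: str
    media: str        # e.g., "voice", "chat", "sms"
    service: str
    sentiment: float  # assumed scale: -1.0 (negative) to 1.0 (positive)


Condition = Callable[[Interaction], bool]


def matches(item: Interaction, blocks: list[list[Condition]]) -> bool:
    # Assumption: all conditions in a block must hold (AND); any block may match (OR).
    return any(all(cond(item) for cond in block) for block in blocks)


def sample_for_evaluation(items: list[Interaction], blocks: list[list[Condition]],
                          k: int, seed: int | None = None) -> list[Interaction]:
    """Randomly pick up to k matching interactions; a seed makes the draw repeatable."""
    hits = [item for item in items if matches(item, blocks)]
    return random.Random(seed).sample(hits, min(k, len(hits)))


interactions = [
    Interaction("c1", "ana", "voice", "Support", -0.8),
    Interaction("c2", "ana", "chat", "Billing", 0.4),
    Interaction("c3", "ben", "voice", "Support", 0.2),
    Interaction("c4", "ben", "sms", "Sales", -0.9),
]
blocks = [
    [lambda i: i.media == "voice", lambda i: i.sentiment < -0.5],  # negative voice calls
    [lambda i: i.service == "Billing"],                             # or any Billing interaction
]
print([i.id for i in interactions if matches(i, blocks)])  # ['c1', 'c2']
print(len(sample_for_evaluation(interactions, blocks, k=1, seed=42)))  # 1
```

Sampling randomly within the matching set, rather than always taking the first hits, avoids biasing evaluations toward whichever interactions a search happens to list first.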

Once interactions are evaluated, quality management reports such as Actual Evals, Disputed Evaluations, Eval Areas, and Evaluator Performance can be useful for further reviewing and refining how evaluations are selected.

Using Evaluations to Identify Areas that Need Improvement

You can design evaluations that will help you determine whether your goals are being met. An evaluation is the formal process of analyzing customer interactions and identifying areas that need improvement.

Expressing KPIs in Your Evaluation Forms

Omni QM evaluations begin with configurable evaluation forms, which are sets of evaluation areas and questions used to evaluate and grade interactions between agents and customers. Forms give your quality goals concrete meaning: each form reflects the company-wide quality KPIs that you want to measure.

Evaluation forms are created and customized in the Contact Center Administrator application by users with the Quality Evaluator Admin role.

Your evaluation forms should be tailored to the role of the employee being evaluated (e.g., Agent, Supervisor, etc.) and the service being provided. They should contain specific evaluation areas that address any important points from your quality management goals. (See section Evaluation Areas for more information.)

Evaluation forms are the basis for many Omni QM quality management reports, including Actual Evals, Answer Frequency, Disputed Evaluations, Eval Areas, Question Averages, and so forth. Because they are so configurable, Omni QM evaluation forms let you pinpoint what matters most to your company and then extract the data for analysis and further evaluation.

Evaluation Areas

Evaluation areas are groups of questions on evaluation forms that are designed to assess specific areas of agent performance.

Common evaluation areas include the following:

- Addressing concerns - Does the agent respond to the customer’s main concerns?

- Closing the interaction - Does the agent end and complete the interaction appropriately?

- Communication - Is the agent clear and courteous to the customer?

- Discovery - Does the agent listen to the customer to learn about their concerns?

- Identification and verification - Does the agent correctly identify account information?

- Offer - Does the agent qualify prospective customers?

- Procedures - Does the agent follow procedures to hold, transfer, and so forth, when using the software?

- Professional behavior - Is the agent professional, calm, and in control when interacting with the customer?

- Resolution - Does the agent use resources properly to help fulfill the customer’s needs?

- Starting the conversation/greeting - Does the agent greet the customer in a friendly, positive way?

- Upsell/cross-sell - Is the agent knowledgeable about the product/service and able to suggest/sell other offerings?

- Written language - Is the agent’s writing easy to understand and correct for the given media channel?

Evaluation areas are a great way to address specific goals. For example, one of your company’s goals might be ensuring that all customers are greeted in a friendly manner. To address this in a form, you might create an evaluation area called “Greetings,” and each of the questions in the area will focus on specific aspects of greetings (e.g., required dialog, vocal tone, saying your company’s name, etc.).

As a Quality Evaluator Admin, you might want to create evaluation forms with common evaluation areas for all employees to use. Omni QM enables you to use configured evaluation areas across multiple evaluation forms. The ability to share evaluation areas and questions helps to promote consistency across forms and relieve quality evaluator admins of repetitious work when designing evaluation forms.

Sharing evaluation areas helps ensure that the most important elements of your goals are included across all forms. Very important areas can be weighted more heavily, while less important areas can be excluded from grading. A question’s weight determines how much it contributes to its evaluation area, and an area’s weight determines how much it contributes relative to the other areas of the form. Moreover, individual questions may be configured to add a bonus or a penalty, or even to fail an entire evaluation area.
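To show how weights, bonuses, penalties, and fail answers can interact, here is one plausible scoring model written as a Python sketch. The specific rules (question scores averaged by weight within an area, bonuses and penalties applied as percentage points and clamped to 0-100, a fail answer zeroing its area, and areas with weight 0 left out of the form score) are assumptions for illustration; Omni QM's actual calculation depends on how each form is configured and may differ.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Answer:
    earned: float             # points given by the evaluator
    possible: float           # maximum points for the question (assumed > 0)
    weight: float = 1.0       # contribution relative to other questions in the area
    bonus: float = 0.0        # percentage points added to the area score
    penalty: float = 0.0      # percentage points subtracted from the area score
    fails_area: bool = False  # a "fail" answer zeroes the whole area


@dataclass
class Area:
    name: str
    weight: float             # relative to the other areas; 0 = not graded
    answers: list[Answer] = field(default_factory=list)


def area_score(area: Area) -> float:
    """Weighted percentage of points earned, adjusted and clamped to 0-100."""
    if any(a.fails_area for a in area.answers):
        return 0.0
    total_weight = sum(a.weight for a in area.answers)
    if total_weight == 0:
        return 0.0
    base = sum(a.weight * a.earned / a.possible for a in area.answers) / total_weight * 100
    adjusted = base + sum(a.bonus for a in area.answers) - sum(a.penalty for a in area.answers)
    return max(0.0, min(100.0, adjusted))


def form_score(areas: list[Area]) -> float:
    """Weighted average of graded area scores; weight-0 areas are excluded."""
    graded = [ar for ar in areas if ar.weight > 0]
    total = sum(ar.weight for ar in graded)
    if total == 0:
        return 0.0
    return sum(ar.weight * area_score(ar) for ar in graded) / total


greetings = Area("Greetings", weight=2, answers=[Answer(1, 1), Answer(1, 2)])
procedures = Area("Procedures", weight=1, answers=[Answer(4, 4, penalty=10)])
closing = Area("Closing", weight=0, answers=[Answer(0, 1)])  # not graded
compliance = Area("Compliance", weight=1, answers=[Answer(1, 1, fails_area=True)])

print(area_score(greetings), area_score(procedures))            # 75.0 90.0
print(form_score([greetings, procedures, closing]))              # 80.0
print(form_score([greetings, procedures, closing, compliance]))  # 60.0
```

In this model, the "Greetings" area counts twice as much as "Procedures", while the failed "Compliance" area pulls the form score down without affecting any other area.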

Determining Quality Score

After evaluations are conducted and accepted, how can an agent or supervisor understand their service quality standing? By checking their quality score.

Located on the Agent Desktop Home Screen, the Quality Score widgets display the average score of selected evaluation areas across the agent’s or supervisor’s evaluated interactions; the score can be calculated over a set time interval (e.g., the last 30 days). Additionally, agents see their quality score, as well as their scores for individual evaluation areas, in Eval Home (in place of the interaction search options).
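As a rough model of what such a widget reports, the Python sketch below averages the scores of selected evaluation areas across evaluations completed in the 30 days ending on a given date. The data layout and the choice to average individual area scores (rather than, say, per-evaluation totals) are assumptions made for illustration, not the widget's documented formula.

```python
from __future__ import annotations

from datetime import date, timedelta
from statistics import mean


def quality_score(evaluations: list[tuple[date, dict[str, float]]],
                  areas: list[str], today: date, days: int = 30) -> float | None:
    """Average of the selected area scores over evaluations in the last `days` days."""
    start = today - timedelta(days=days)
    scores = [
        area_scores[name]
        for completed, area_scores in evaluations
        if start < completed <= today     # inside the rolling window
        for name in areas
        if name in area_scores            # skip areas this form did not include
    ]
    return mean(scores) if scores else None  # None: nothing evaluated in the window


evals = [
    (date(2024, 5, 2), {"Greetings": 80.0, "Resolution": 90.0}),
    (date(2024, 5, 20), {"Greetings": 100.0, "Resolution": 70.0}),
    (date(2024, 3, 1), {"Greetings": 10.0, "Resolution": 10.0}),  # outside the window
]
print(quality_score(evals, ["Greetings", "Resolution"], today=date(2024, 5, 31)))  # 85.0
print(quality_score(evals, ["Greetings"], today=date(2024, 5, 31)))  # 90.0
```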

The purpose of a quality score is to give agents, supervisors, and other evaluated employees a quick way to understand the quality of their interactions. A high score indicates that an agent understands what is most important to the company and is able to provide it to customers. A low score indicates that further training or mentoring is required. In such cases, quality evaluators can use the Score Report to review the evaluations of a particular agent.

Conducting Evaluations

The overall Omni QM evaluation process involves all roles and includes evaluation form creation, interaction search and selection, evaluation, confirmation/feedback, and calibration.

Filling out Evaluation Forms

During the evaluation period, evaluation forms are filled out in Eval Console by users with the Quality Evaluator or Quality Evaluator Admin role. This means that the evaluator answers evaluation form questions while reviewing the interaction.

Depending on the interaction type, evaluators can review screen or call recordings, voice and chat transcripts, and so forth. On playback, recordings can be sped up or slowed down, and evaluators can leave notes to provide feedback to the agent. While filling out evaluation forms, evaluators can leave comments or include attachments that agents can refer back to. Evaluators can leave unfinished evaluations as drafts and bookmark completed evaluations to return to for reference.

For more information, see Conducting Evaluations.

Self-Evaluations

Self-evaluation is an important feature that lets privileged users review and grade their own interactions for quality in the given evaluation areas. Self-evaluations give contact centers a way to address more interactions and increase coverage.

This feature is enabled when users are given a special Omni QM privilege. The self-evaluation privilege is granted automatically to certain roles but can be enabled for other roles as needed.

How does it work? Privileged users use evaluation forms to grade their own interactions, quality evaluators or supervisors review the self-evaluations, and the evaluations are then either accepted or rejected (disputed). Disputed evaluations are good indicators that either the agent’s training or the forms need to be reviewed or calibrated.

In self-evaluation environments, certain reports can be useful for improving training or forms, such as Disputed Evaluations, All Question Comments, Answer Frequency, Score Report, and so forth.
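Reports such as Disputed Evaluations surface this signal directly. To see where disputes cluster, one simple approach is to compute a dispute rate per form (or per agent) from a list of review outcomes, as in the Python sketch below. The input format and the 25 percent review threshold are hypothetical values for illustration, not Omni QM defaults.

```python
from __future__ import annotations

from collections import Counter


def dispute_rates(reviews: list[tuple[str, str]]) -> dict[str, float]:
    """reviews: (group, outcome) pairs, where group is a form or agent name
    and outcome is "accepted" or "disputed". Returns the disputed share per group."""
    totals = Counter()
    disputed = Counter()
    for group, outcome in reviews:
        totals[group] += 1
        if outcome == "disputed":
            disputed[group] += 1
    return {group: disputed[group] / totals[group] for group in totals}


def groups_to_review(reviews: list[tuple[str, str]], threshold: float = 0.25) -> list[str]:
    # Hypothetical threshold: flag groups where more than 25% of reviews were disputed.
    return sorted(g for g, rate in dispute_rates(reviews).items() if rate > threshold)


reviews = [
    ("Support form", "accepted"), ("Support form", "disputed"),
    ("Support form", "accepted"), ("Support form", "accepted"),
    ("Sales form", "disputed"), ("Sales form", "disputed"), ("Sales form", "accepted"),
]
print(dispute_rates(reviews)["Support form"])  # 0.25
print(groups_to_review(reviews))  # ['Sales form']
```

Grouping the same outcomes by agent instead of by form helps distinguish a training issue (one agent disputes often) from a form issue (many agents dispute the same form).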

Improving Quality: Learning from Evaluations and Training

After interactions are evaluated, users in all contact center roles have the opportunity to get feedback on evaluations, learn which evaluation areas need improvement, and retrain as necessary. We call this the quality improvement process; it generally includes receiving evaluations with feedback, understanding quality scores, and calibrating the evaluation process.

Confirmation: Providing Feedback to Agents

When evaluations are done, agents are alerted to them when they visit Eval Home in the Agent Desktop application. Upon reviewing the evaluation of an interaction, the agent enters the confirmation process. Also known as feedback, confirmation is the process in which an agent receives the evaluation of their interaction, along with the feedback and quality score given by the quality evaluator, and either accepts or rejects the score.

Confirmation gives the supervisor the opportunity to review the evaluation form with an agent, and it gives the agent the chance to acknowledge the provided notes and commit to completing any required training. Enabling confirmations in your contact center keeps evaluators, agents, and supervisors connected throughout the evaluation process.

Confirmation can be added to the regular evaluation process and is enabled when confirmation rules are configured. These rules require agents or supervisors to accept or reject an evaluator’s score of an interaction. For example, when confirmation rules are enabled and an evaluation is not accepted, the evaluation is returned to the evaluator.
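The confirmation flow can be pictured as a small state machine. The Python sketch below is illustrative only: the state names and the `submit` and `confirm` helpers are hypothetical and do not correspond to Omni QM settings or APIs.

```python
from __future__ import annotations

from enum import Enum


class EvalState(Enum):
    # Hypothetical state names for illustration.
    COMPLETED = "completed"
    AWAITING_CONFIRMATION = "awaiting confirmation"
    ACCEPTED = "accepted"
    RETURNED_TO_EVALUATOR = "returned to evaluator"


def submit(confirmation_rules_enabled: bool) -> EvalState:
    # Without confirmation rules, a finished evaluation needs no further action.
    if confirmation_rules_enabled:
        return EvalState.AWAITING_CONFIRMATION
    return EvalState.COMPLETED


def confirm(state: EvalState, accepted: bool) -> EvalState:
    """The agent or supervisor accepts or rejects the evaluator's score."""
    if state is not EvalState.AWAITING_CONFIRMATION:
        raise ValueError(f"cannot confirm an evaluation in state {state.value!r}")
    return EvalState.ACCEPTED if accepted else EvalState.RETURNED_TO_EVALUATOR


state = submit(confirmation_rules_enabled=True)
print(state.value)                           # awaiting confirmation
print(confirm(state, accepted=False).value)  # returned to evaluator
```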

Calibration: Making the Evaluation Process Fair

Calibration is the process of ensuring that the same thing is measured the same way, no matter who measures it. In the context of quality management, calibration ensures that evaluators review interactions and fill out evaluation forms consistently and fairly. If agent performance meets your company’s needs but you feel that your forms and training could be improved, Omni QM’s calibrations feature can help.

Conducting calibrations works the same way as conducting evaluations. Quality evaluator admins search for interactions and then, rather than assigning the interactions to evaluators for evaluation, assign evaluators to calibrate them. Calibrating an interaction has no effect on the interaction or agent score, but it lets you see how different evaluators interpret their training and approach various evaluation areas. For example, if three out of four evaluators grade interactions consistently with a specific evaluation form but the fourth evaluator is inconsistent, calibrations let you identify this and retrain as necessary.

Note that calibrations may be conducted on regular interactions or on previously evaluated interactions. In either case, when evaluators choose different options, you can review why they did so and then retrain them or change your evaluation forms for clarity.

In the calibration process, we recommend completing all calibrations before the next calibration meeting. Another useful tool is the Calibrations report, which shows all calibrations per form and per user, along with any numerical point deviation.
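To illustrate the kind of point deviation a calibration review looks for, the Python sketch below compares each evaluator's score on every calibrated interaction with the median score for that interaction and averages the absolute differences per evaluator. The use of the median (so that a single outlier does not shift the reference point), the input layout, and the 10-point flag threshold are all assumptions; this is not how the Calibrations report computes its figures.

```python
from __future__ import annotations

from statistics import mean, median


def avg_deviation_by_evaluator(calibrations: dict[str, dict[str, float]]) -> dict[str, float]:
    """calibrations maps interaction id -> {evaluator: total score}.
    Returns each evaluator's mean absolute distance from the per-interaction median."""
    deviations: dict[str, list[float]] = {}
    for scores in calibrations.values():
        center = median(scores.values())
        for evaluator, score in scores.items():
            deviations.setdefault(evaluator, []).append(abs(score - center))
    return {evaluator: mean(devs) for evaluator, devs in deviations.items()}


def inconsistent_evaluators(calibrations: dict[str, dict[str, float]],
                            threshold: float = 10.0) -> list[str]:
    # Hypothetical threshold: flag evaluators who are off by more than 10 points on average.
    devs = avg_deviation_by_evaluator(calibrations)
    return sorted(name for name, d in devs.items() if d > threshold)


# Three evaluators agree closely; the fourth grades much lower.
calibrations = {
    "c101": {"Ana": 80.0, "Ben": 82.0, "Cai": 78.0, "Dee": 50.0},
    "c102": {"Ana": 90.0, "Ben": 88.0, "Cai": 92.0, "Dee": 60.0},
}
print(avg_deviation_by_evaluator(calibrations))  # {'Ana': 1.0, 'Ben': 2.0, 'Cai': 2.0, 'Dee': 29.0}
print(inconsistent_evaluators(calibrations))     # ['Dee']
```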

Other useful QM reports include the Disputed Evaluations report, which displays all disputed evaluations for quick reference, as well as the All Question Comments and Evaluator Performance reports.