How to Complete Calibrations

Just as evaluations can be assigned to you, your quality evaluator admins can assign you to conduct calibrations for interactions. A calibration is an evaluation of an interaction, but it does not count towards the interaction’s evaluation score. Interactions are calibrated by the evaluators of a team.

Your quality evaluator admins can assign any number of team evaluators to calibrate an interaction. This allows them to identify inconsistencies and fine-tune how evaluations are conducted, especially in difficult evaluation areas. For example, if three out of four team evaluators grade interactions consistently with a specific evaluation form but the fourth team evaluator does not, calibrations allow your admins to identify this and retrain as necessary.
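To make the three-out-of-four example concrete, here is a minimal sketch of how calibration scores could be compared outside the product. It is an illustration only: the function name `flag_inconsistent`, the evaluator names, the scores, and the tolerance of 10 points are all hypothetical, and the product may compare calibrations differently.

```python
from statistics import median


def flag_inconsistent(scores, tolerance=10.0):
    """Return evaluators whose scores stray from the group consensus.

    scores: dict mapping an evaluator name to a list of scores, one per
            calibrated interaction (same order for every evaluator).
    tolerance: hypothetical threshold, in score points, for the average
               distance from the consensus score.
    """
    evaluators = list(scores)
    interaction_count = len(scores[evaluators[0]])

    # Consensus for each interaction: the median of all evaluators' scores.
    consensus = [
        median(scores[name][i] for name in evaluators)
        for i in range(interaction_count)
    ]

    flagged = {}
    for name in evaluators:
        # Average absolute distance between this evaluator and the consensus.
        deviation = sum(
            abs(scores[name][i] - consensus[i]) for i in range(interaction_count)
        ) / interaction_count
        if deviation > tolerance:
            flagged[name] = deviation
    return flagged


# Hypothetical calibration scores for three interactions.
scores = {
    "evaluator_a": [80, 90, 70],
    "evaluator_b": [82, 88, 72],
    "evaluator_c": [78, 91, 69],
    "evaluator_d": [60, 100, 95],
}

flagged = flag_inconsistent(scores)
print(flagged)
```

With these made-up numbers, only `evaluator_d` exceeds the tolerance, which mirrors the case where one team evaluator grades differently from the other three.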

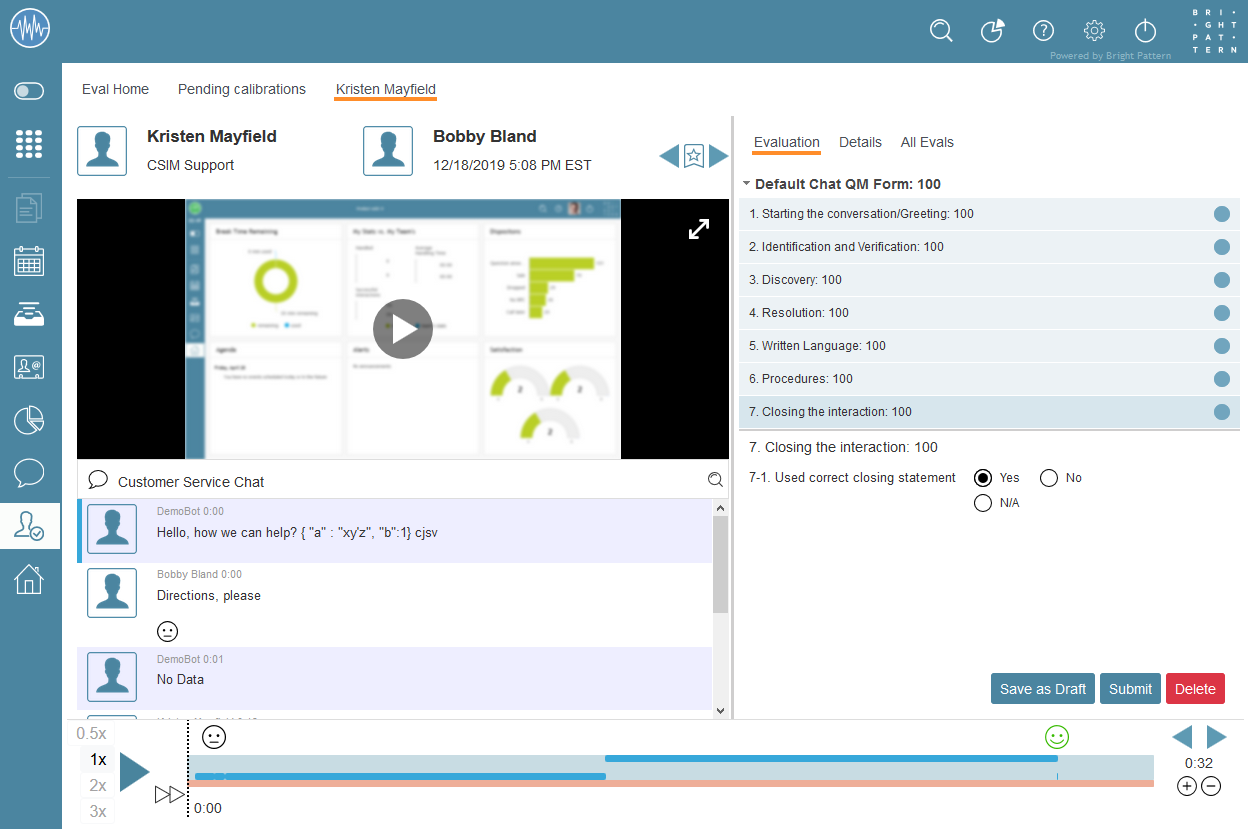

The process of calibrating an interaction is identical to completing an evaluation. Click the Pending calibrations search preset button to list the interactions on the search results screen, then double-click an interaction to view it in Eval Console.

On the right side of the screen, under the Evaluation tab, select the appropriate evaluation form (if necessary), then answer the questions in the available evaluation areas. When you have answered all evaluation form questions, click the Submit button. Note that you may save a calibration in progress as a draft.