AI Provider Integration

AI Provider integrations allow you to take advantage of language models from third-party AI providers in voice and chat scenarios. These models allow the AI Agent scenario block to hold purposeful conversations with customers and extract relevant data to scenario variables, which can then be used for further automation.

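Each AI Provider offers a number of language models with varying capabilities. The following AI Providers are supported:

- OpenAI

- Google Vertex AI

- Anthropic Claude AI

- Custom AI Gateway (any provider with an API compatible with the OpenAI Chat Completions API)

To learn how to add an AI integration account and use the AI Agent scenario block in your scenarios, see the AI Agent tutorial.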

Common Properties

The following properties are available for all AI provider integration accounts.

Name

The unique name of this integration account, used to identify it elsewhere in the system. Because you can have multiple integration accounts of the same type, it is recommended to use a descriptive name.

Type

Read-only property indicating the type of integration account (e.g., OpenAI or Google Vertex AI).

Advanced Options

Use the Advanced Options property to enter a JSON-formatted object to configure API parameters that are not explicitly provided in the standard integration settings. This allows you to access specific model capabilities (e.g., "thinking" modes, reasoning_effort), adjust token limits (e.g., max_completion_tokens), or modify other API parameters without waiting for changes to the integration. To modify this property, you must have the Configure system-wide settings privilege.

Always use the Test Connection button to verify that the configuration provided in Advanced Options is valid.

Take note of the following regarding validation and usage:

- Certain settings, especially those that enable "thinking modes" or increase the "thinking budget" or "max tokens", can significantly impact the cost of model usage. Make sure you understand the implications before changing the advanced options.

- Settings defined here apply globally wherever this integration account is used (e.g., AI Agent blocks, Intelligence Engine).

- The input must be a valid JSON object (e.g., {"temperature": 0.5, "top_p": 0.9}).

- The system validates JSON syntax only. It does not verify whether the parameters are semantically correct or supported by your specific AI provider and model. You are responsible for ensuring that the options are valid for the selected model.

- The following options are system-managed and cannot be set via Advanced Options. Validation will fail if any of them are included: model, response_format, responseMimeType, responseSchema, or tools.

- To explicitly remove a parameter from the API request payload, set it to null (e.g., "max_tokens": null).

- Order of application of options (highest precedence first):

  - Block Settings: Parameters explicitly defined in a scenario block (specifically Temperature) take the highest precedence.

  - Advanced Options: Valid options defined here override the system's hardcoded defaults.

  - Integration value: If a parameter is not defined in the block or in Advanced Options, the value defined by the integration is used.

- Provider specifics:

  - OpenAI, Anthropic, Custom: Options are merged into the top-level JSON request payload.

  - Google Vertex AI: Options are treated as part of the generationConfig sub-object.
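The validation and precedence rules above can be illustrated with a short Python sketch. It is a hypothetical model of the documented behavior, not Bright Pattern's implementation: the function names, the provider identifiers ("openai", "vertex"), and passing integration defaults and block settings as dictionaries are assumptions made for illustration.

```python
import json
from typing import Any, Dict

# Options listed as system-managed; validation fails if any of them appear.
RESERVED_KEYS = {"model", "response_format", "responseMimeType", "responseSchema", "tools"}


def validate_advanced_options(text: str) -> Dict[str, Any]:
    """Check JSON syntax and reserved keys only; parameter semantics are not checked."""
    options = json.loads(text)  # raises json.JSONDecodeError (a ValueError) on bad syntax
    if not isinstance(options, dict):
        raise ValueError("Advanced Options must be a JSON object")
    reserved = options.keys() & RESERVED_KEYS
    if reserved:
        raise ValueError(f"system-managed options are not allowed: {sorted(reserved)}")
    return options


def build_parameters(provider: str,
                     integration_defaults: Dict[str, Any],
                     advanced_options: Dict[str, Any],
                     block_settings: Dict[str, Any]) -> Dict[str, Any]:
    """Hypothetical merge in precedence order: integration < Advanced Options < block.

    block_settings should contain only parameters explicitly set on the
    scenario block (for example, Temperature).
    """
    params = dict(integration_defaults)
    params.update(advanced_options)
    params.update(block_settings)
    # A null (None) value removes the parameter from the request payload.
    params = {key: value for key, value in params.items() if value is not None}
    if provider == "vertex":
        # Google Vertex AI: options belong to the generationConfig sub-object.
        return {"generationConfig": params}
    # OpenAI, Anthropic, Custom: options are merged into the top-level payload.
    return params
```

For example, with integration defaults of temperature 1.0 and max_tokens 1000, the Advanced Options {"temperature": 0.5, "top_p": 0.9, "max_tokens": null}, and a block Temperature of 0.2, the sketch produces the top-level parameters {"temperature": 0.2, "top_p": 0.9}.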

Advanced Option Examples

The following examples demonstrate common use cases for modifying the Advanced Options configuration. However, these examples will not work for every model. Consult the API documentation for the specific model and AI provider before configuring the Advanced Options.

- Removing and Adjusting Model Parameters

  - If a model deprecates max_tokens in favor of max_completion_tokens and requires a fixed temperature, you can configure the account to nullify the old parameter and set the new requirements.

    { "max_tokens": null, "max_completion_tokens": 1000, "temperature": 1 }

- Disabling Vertex AI Thinking

  - For Google Vertex models (e.g., Gemini-2.5-flash) that enable "thinking" by default, you can disable the feature to manage costs or latency by setting the budget to zero.

    { "thinkingConfig": { "thinkingBudget": 0 } }

- Increasing Token Limits

  - If the initial Discovery fails because the default token limit (e.g., 1000) is too low for a model, you can increase the limit.

    { "max_tokens": 8096 }
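To see how the three examples change an outgoing request, the following Python sketch applies each one to a hypothetical base request. The base request contents, the model name "example-model", and the apply_options helper are assumptions for illustration; only the option objects come from the examples.

```python
import copy
from typing import Any, Dict, Optional


def apply_options(request: Dict[str, Any], options: Dict[str, Any],
                  nested_key: Optional[str] = None) -> Dict[str, Any]:
    """Hypothetical helper: merge options into a copy of request.

    nested_key="generationConfig" mimics the Vertex AI behavior; otherwise the
    options are merged at the top level. A None (JSON null) value deletes the key.
    """
    result = copy.deepcopy(request)
    target = result.setdefault(nested_key, {}) if nested_key else result
    for key, value in options.items():
        if value is None:
            target.pop(key, None)
        else:
            target[key] = value
    return result


# Example 1: replace max_tokens with max_completion_tokens and fix the temperature.
openai_request = {"model": "example-model", "messages": [], "max_tokens": 1000, "temperature": 0.7}
adjusted = apply_options(openai_request,
                         {"max_tokens": None, "max_completion_tokens": 1000, "temperature": 1})

# Example 2: disable Vertex AI thinking inside generationConfig.
vertex_request = {"contents": [], "generationConfig": {"temperature": 0.7}}
no_thinking = apply_options(vertex_request, {"thinkingConfig": {"thinkingBudget": 0}},
                            nested_key="generationConfig")

# Example 3: raise the token limit.
larger = apply_options(openai_request, {"max_tokens": 8096})
```

In this sketch, adjusted no longer contains max_tokens, and no_thinking keeps the existing temperature while adding thinkingConfig under generationConfig.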

Test Connection

Click this button to verify that Bright Pattern Contact Center can connect to the AI provider account using the configured credentials and settings, including any Advanced Options.

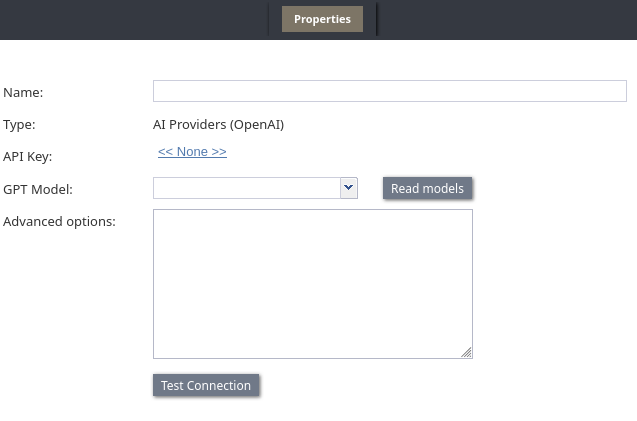

OpenAI Properties

After adding an OpenAI account, the properties dialog will appear. Enter the API key for your OpenAI account and click the Read Models button to retrieve the list of GPT models that can be accessed from your account. Once configured, the account can be selected as an AI Provider in an AI Agent scenario block.

API Key

The OpenAI API key to use with this integration account. You can generate API keys on the API keys page of your OpenAI account.

GPT Model

Select the GPT model that this integration account will provide. If the list is empty, click the Read Models button to retrieve the list of models. Refer to the OpenAI models documentation for information about model capabilities and pricing.

Read Models

Click this button to refresh the list of GPT models available to your OpenAI account.

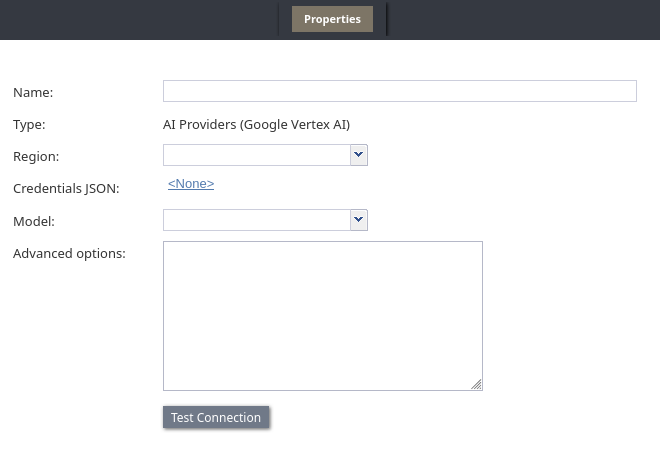
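Conceptually, Read Models corresponds to listing the models visible to an API key. The Python sketch below does this with OpenAI's public List Models endpoint (GET https://api.openai.com/v1/models). It is an assumption that the integration uses this endpoint, and any filtering it applies (for example, showing only GPT models) is not reproduced. The network call is isolated in read_models so that request construction and parsing can be checked offline.

```python
import json
import urllib.request
from typing import List

OPENAI_MODELS_URL = "https://api.openai.com/v1/models"


def build_list_models_request(api_key: str) -> urllib.request.Request:
    # OpenAI authenticates API calls with a Bearer token.
    return urllib.request.Request(OPENAI_MODELS_URL,
                                  headers={"Authorization": f"Bearer {api_key}"})


def parse_model_ids(body: str) -> List[str]:
    # Response shape: {"object": "list", "data": [{"id": "...", "object": "model", ...}]}
    return sorted(item["id"] for item in json.loads(body)["data"])


def read_models(api_key: str) -> List[str]:
    # Performs a real HTTPS request; requires network access and a valid key.
    with urllib.request.urlopen(build_list_models_request(api_key), timeout=10) as resp:
        return parse_model_ids(resp.read().decode("utf-8"))
```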

Google Vertex AI Properties

After adding a Google Vertex AI account, the properties dialog will appear. In Properties, you will upload the JSON-formatted key file for the service account, select the desired region, and choose the model. Once configured, the account can be selected as an AI Provider in an AI Agent scenario block. Note that Vertex AI must be enabled for the Google Cloud Platform project associated with the service account used for the integration.

Region

Select the Google Cloud region for your Vertex AI instance from the dropdown list. The list is automatically populated with available regions.

Credentials JSON

Upload the JSON-formatted key file for the Google Cloud service account that will provide the model. Refer to the Vertex AI FAQ article for more information on how to retrieve your credentials.

Model

Select the desired Vertex AI model that will be provided by this integration account. Refer to the Google models documentation for information about model capabilities and pricing.

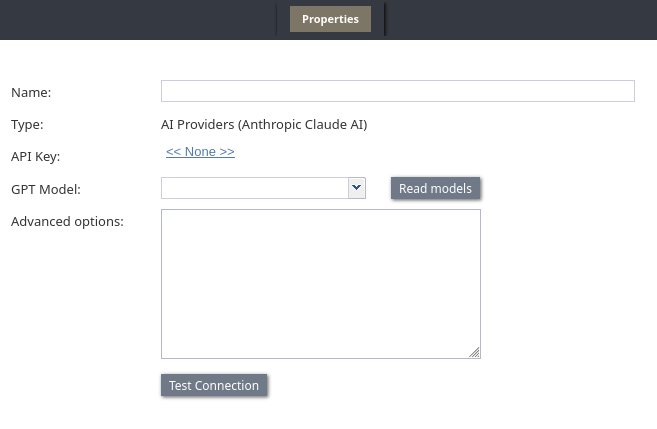
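Two parts of the Vertex AI configuration can be sketched in Python: a sanity check of the uploaded service account key file, and the regional REST endpoint that a generateContent call would target. The list of checked fields and the function names are assumptions for illustration, and obtaining an OAuth access token from the key (for example, with Google's google-auth library) is deliberately omitted.

```python
import json
from typing import Any, Dict

# Fields of a Google Cloud service account key file that this sketch checks.
REQUIRED_FIELDS = ("type", "project_id", "private_key", "client_email")


def load_service_account(text: str) -> Dict[str, Any]:
    """Parse the Credentials JSON and check that it looks like a service account key."""
    info = json.loads(text)
    if not isinstance(info, dict):
        raise ValueError("credentials JSON must be an object")
    missing = [field for field in REQUIRED_FIELDS if not info.get(field)]
    if missing:
        raise ValueError(f"credentials JSON is missing: {missing}")
    if info["type"] != "service_account":
        raise ValueError("credentials JSON is not a service account key")
    return info


def generate_content_url(project_id: str, region: str, model: str) -> str:
    """Vertex AI REST endpoint for a Google publisher model in the given region."""
    # Regional hosts are prefixed with the region; the "global" location is not.
    if region == "global":
        host = "aiplatform.googleapis.com"
    else:
        host = f"{region}-aiplatform.googleapis.com"
    return (f"https://{host}/v1/projects/{project_id}/locations/{region}"
            f"/publishers/google/models/{model}:generateContent")
```

The project ID in the URL comes from the key file, which is why the service account's project must have Vertex AI enabled.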

Anthropic Claude AI Properties

After adding an Anthropic Claude AI account, the properties dialog will appear. Enter the API key for your Anthropic developer account and click the Read Models button to retrieve the list of Claude models that can be accessed from your account. Once configured, the account can be selected as an AI Provider for relevant features, such as the AI Agent and Ask AI scenario blocks.

API Key

The Anthropic API key to use with this integration account. You can create and manage API keys from your Anthropic account console.

Model

Select the Claude model that this integration account will provide. If the list is empty, click the Read Models button to retrieve the list of models. Refer to the Anthropic models documentation for information about model capabilities and pricing.

Read Models

Click this button to refresh the list of Claude models available to your Anthropic account.

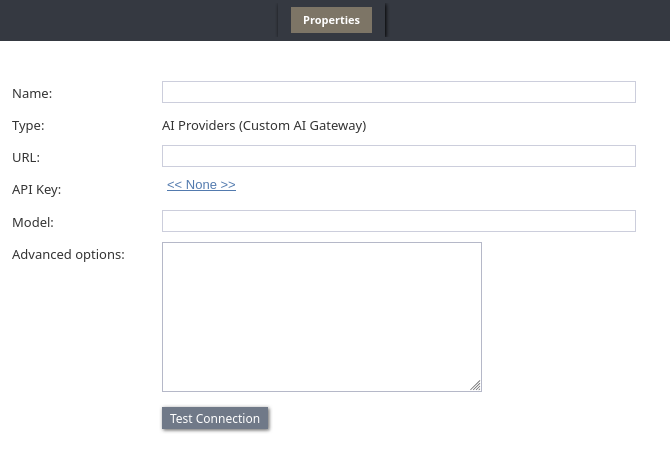
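Similarly, Read Models for Anthropic can be sketched against Anthropic's public List Models endpoint (GET https://api.anthropic.com/v1/models), which authenticates with an x-api-key header and expects an anthropic-version header. Whether the integration uses this exact endpoint and version value is an assumption; the sketch reads only the first page of results and returns (id, display_name) pairs.

```python
import json
import urllib.request
from typing import List, Tuple

ANTHROPIC_MODELS_URL = "https://api.anthropic.com/v1/models"
ANTHROPIC_VERSION = "2023-06-01"  # API version header value; assumed for this sketch


def build_list_models_request(api_key: str) -> urllib.request.Request:
    return urllib.request.Request(ANTHROPIC_MODELS_URL, headers={
        "x-api-key": api_key,
        "anthropic-version": ANTHROPIC_VERSION,
    })


def parse_models(body: str) -> List[Tuple[str, str]]:
    # Response shape: {"data": [{"id": ..., "display_name": ..., ...}], "has_more": ..., ...}
    # Pagination (has_more) is ignored: only the first page is parsed.
    payload = json.loads(body)
    return [(m["id"], m.get("display_name", m["id"])) for m in payload["data"]]


def read_models(api_key: str) -> List[Tuple[str, str]]:
    # Performs a real HTTPS request; requires network access and a valid key.
    with urllib.request.urlopen(build_list_models_request(api_key), timeout=10) as resp:
        return parse_models(resp.read().decode("utf-8"))
```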

Custom AI Gateway Properties

After adding a Custom AI Gateway account, the properties dialog will appear. This account type allows you to integrate with any AI provider that offers an API method compatible with the OpenAI Chat Completions API endpoint. Once configured, the account can be selected as an AI Provider for relevant features, such as the AI Agent and Ask AI scenario blocks.

URL

The full path to the custom AI provider's API endpoint for chat completions (e.g., https://api.example.com/v1/chat/completions). The endpoint must conform to the OpenAI Chat Completions API specification. If the protocol (http:// or https://) is omitted, https:// is assumed.
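The default-scheme rule can be expressed as a small Python function. This is an illustrative sketch of the documented behavior (https:// is prepended when no protocol is given), not the product's code; the function name is hypothetical.

```python
def normalize_gateway_url(url: str) -> str:
    """Prepend https:// when the URL has no http:// or https:// prefix."""
    url = url.strip()
    if url.lower().startswith(("http://", "https://")):
        return url
    return "https://" + url
```

Apart from the scheme, the URL is used as entered, so it should include the full endpoint path (for example, /v1/chat/completions) rather than just the host.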

API Key

The API key to use with the custom AI provider.

Model

Enter the exact Model ID that this integration account will provide (e.g., llama3-70b-8192). This value is defined by your AI provider.
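Because the gateway must follow the OpenAI Chat Completions specification, requests to it have a predictable shape: a JSON body with model and messages, plus an Authorization header carrying the API key. The Python sketch below builds such a request and extracts the reply text from a response. It assumes the gateway uses Bearer authentication as OpenAI does; the URL, key, and message are placeholders.

```python
import json
import urllib.request
from typing import Any, Dict, Optional


def build_chat_request(url: str, api_key: str, model_id: str, user_text: str,
                       extra: Optional[Dict[str, Any]] = None) -> urllib.request.Request:
    body: Dict[str, Any] = {
        "model": model_id,  # the exact Model ID configured on the account
        "messages": [{"role": "user", "content": user_text}],
    }
    if extra:
        body.update(extra)  # additional top-level parameters, e.g. temperature
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
        method="POST",
    )


def extract_reply(response_body: str) -> str:
    # Chat Completions responses carry the text in choices[0].message.content.
    return json.loads(response_body)["choices"][0]["message"]["content"]
```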

Recommended Reading

For more information on using AI integrations, refer to the following: